TL;DR: Do not enable host caching on Couchbase data disks.

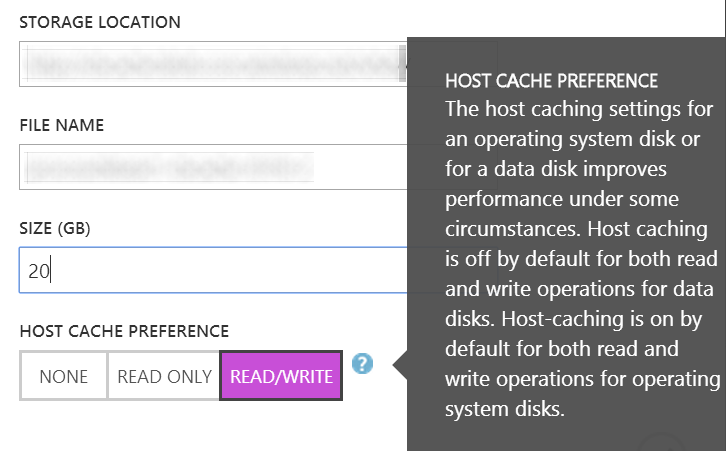

When creating a new disk in Windows Azure, you can choose whether to enable host caching or not.

It's generally assumed that database workloads run best with caching off, but we couldn't find any actual benchmarks that showed the difference. Furthermore, Couchbase doesn't behave exactly like SQL Server as far as disk activity is concerned. So we set out to see what effect, if any, Windows Azure host caching has on Couchbase Server.

To make the test as simple as possible, we used two identical large Ubuntu 12.04 VMs, each within its own dedicated storage account, with a single 20GB data disk attached. One VM had a data disk with host cache set to NONE, the other had it set to READ/WRITE. We mounted the data disks with the options defaults,noatime in fstab.

    azureuser@cbcache:~$ df -h
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/sda1        29G  1.4G   26G   6% /
    udev            1.7G  8.0K  1.7G   1% /dev
    tmpfs           689M  248K  689M   1% /run
    none            5.0M     0  5.0M   0% /run/lock
    none            1.7G     0  1.7G   0% /run/shm
    /dev/sdb1       133G  188M  126G   1% /mnt
    /dev/sdc1        20G  172M   19G   1% /data

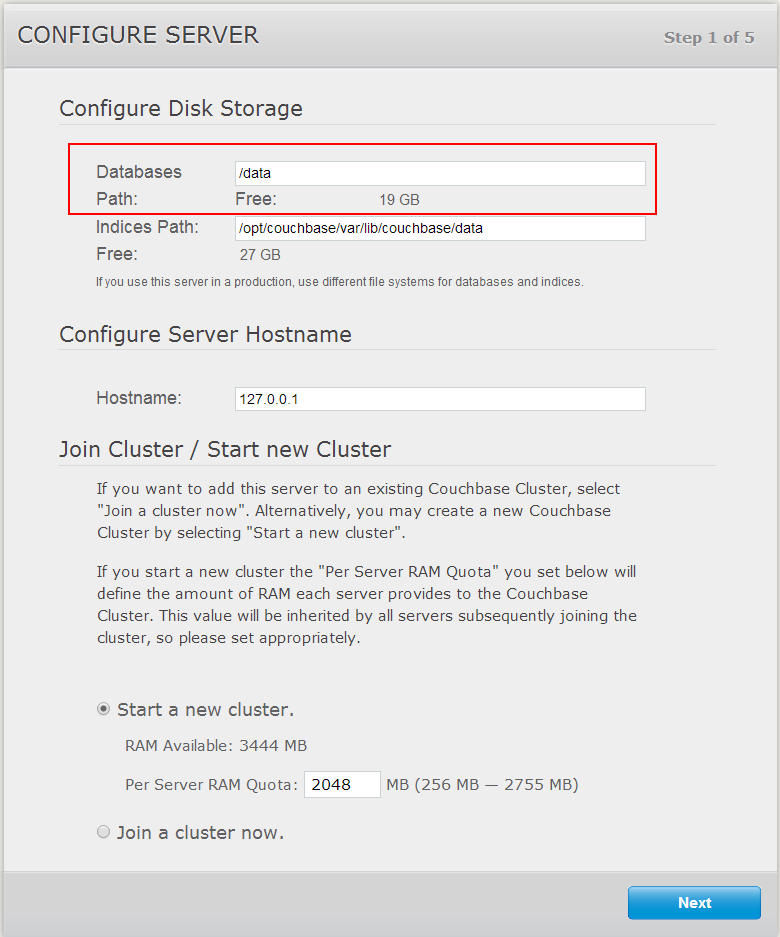

We installed Couchbase Server 2.5 EE on both VMs, with the data path set to the disk we wanted to test and the index path set to the OS drive, since we weren't going to use any views anyway.

Write Test:

For the write test, we first used cbworkloadgen to create 2 million 4k JSON documents to set up the initial data set, then we used cbworkloadgen from two sessions simultaneously to repeatedly go over the data and update each document. With 2GB of RAM allocated for the bucket, 22% of the documents were resident in memory.

    cbworkloadgen -t 10 -r 1 -s 4096 -j -l -i 2000000

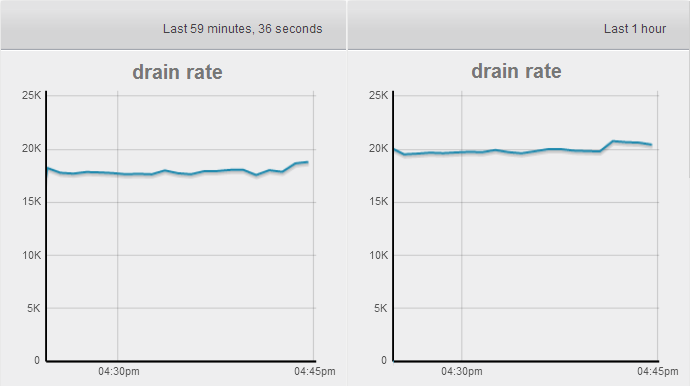

That's 10 threads, 100% writes 0% reads, 4096 byte JSON documents in an infinite loop, with keys from 0 to 2000000, times two sessions. We let this run for an hour.
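To make the flag-by-flag reading above easier to reproduce, here is a minimal Python sketch that rebuilds the same command line and could start the two parallel sessions. The function names are hypothetical, the flag meanings are taken from the description above, and it assumes cbworkloadgen is on the PATH with its default host, bucket and credentials.

```python
import shlex
import subprocess


def build_cbworkloadgen_args(threads=10, set_ratio=1, doc_size=4096, max_items=2000000):
    """Return the argument list for the write-test command shown above."""
    return [
        "cbworkloadgen",
        "-t", str(threads),    # number of worker threads
        "-r", str(set_ratio),  # share of operations that are writes; 1 means 100% writes
        "-s", str(doc_size),   # document size in bytes
        "-j",                  # generate JSON documents
        "-l",                  # loop until interrupted
        "-i", str(max_items),  # keys run from 0 to max_items
    ]


def start_sessions(count=2):
    """Start `count` identical sessions in parallel (assumes cbworkloadgen is installed)."""
    return [subprocess.Popen(build_cbworkloadgen_args()) for _ in range(count)]


if __name__ == "__main__":
    # Only print the command; call start_sessions() on a machine with Couchbase installed.
    print(" ".join(shlex.quote(arg) for arg in build_cbworkloadgen_args()))
```

Because the loop flag never exits on its own, the processes returned by start_sessions() would have to be terminated by the caller once the hour is up.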

The disk with the host cache enabled is on the left, the one without is on the right. As you can see, the write queue on the disk with cache enabled drained about 10% slower: 18.2k vs. 20.6k on average over an hour. At the end of one hour, the average age of the write queue was about 5 seconds for the cached disk and 2.5 seconds for the non-cached one.

(As a side note, the initial data loading also finished about 10% faster on the disk without caching.)

Just to be sure, we repeated the test with a Python script inserting documents from 10 different processes, instead of cbworkloadgen, and got the same results - a 10% difference in favour of the disk without cache.
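The script itself is not shown here, so the following is only a sketch of how a 10-process insert test might be structured, not the code we ran. The key space is split into contiguous ranges, one per process. The `store` callable is a hypothetical placeholder for whatever set call your Couchbase client provides, and all function names are made up for illustration.

```python
import json
from multiprocessing import Process


def key_ranges(total_keys, workers):
    """Split [0, total_keys) into `workers` contiguous, near-equal ranges."""
    base, extra = divmod(total_keys, workers)
    ranges, start = [], 0
    for w in range(workers):
        stop = start + base + (1 if w < extra else 0)
        ranges.append((start, stop))
        start = stop
    return ranges


def make_document(key, size=4096):
    """Build a JSON document padded to `size` bytes (ASCII only)."""
    doc = {"id": key, "padding": ""}
    overhead = len(json.dumps(doc))
    doc["padding"] = "x" * max(0, size - overhead)
    return json.dumps(doc)


def insert_range(start, stop, store):
    """Write one document per key; `store(key, value)` stands in for the client's set call."""
    for key in range(start, stop):
        store(str(key), make_document(key))


def discard(key, value):
    """Placeholder store that drops the document; replace with a real client call."""


def run(total_keys=2000000, workers=10, store=discard):
    """Run one insert process per key range and wait for all of them."""
    procs = [Process(target=insert_range, args=(a, b, store))
             for a, b in key_ranges(total_keys, workers)]
    for p in procs:
        p.start()
    for p in procs:
        p.join()
```

Giving each process its own key range keeps the writers from overwriting each other's documents, which makes the total write volume predictable.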

Sequential Read Test:

For the sequential read test, we used a simple Python script to get documents one by one. We staggered the initial offset of each read process, so that all the reads were from disk, rather than from the working set cached in RAM.
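One way to stagger the readers is to give each process an evenly spaced starting key and let it wrap around the key space. This is a sketch under assumptions: the number of readers (10 here) is not something we recorded above, the function names are hypothetical, and `fetch` is a placeholder for the client's get call.

```python
def staggered_offsets(total_keys, readers):
    """Return one evenly spaced starting key per reader process."""
    step = total_keys // readers
    return [r * step for r in range(readers)]


def read_order(total_keys, offset):
    """Yield every key exactly once, starting at `offset` and wrapping around."""
    for i in range(total_keys):
        yield (offset + i) % total_keys


def read_all(fetch, total_keys, offset):
    """Fetch documents one by one; `fetch(key)` stands in for the client's get call."""
    count = 0
    for key in read_order(total_keys, offset):
        fetch(str(key))
        count += 1
    return count


if __name__ == "__main__":
    # Starting keys for 10 hypothetical readers over the 2 million test documents.
    print(staggered_offsets(2000000, 10))
```

Each reader would then call read_all() with its own offset, so no two processes start on the same part of the data set.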

Disk with cache enabled on top, without on the bottom.


As we intended, we had a 100% cache miss ratio, meaning we were reading all documents from disk. Again, you can see a clear difference in favour of the disk without host caching: an average of 4.8k reads per second vs. 4.2k with caching enabled, over one hour.
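As a quick sanity check on the "about 10%" figure, the relative gap can be worked out from the averages quoted above. The sketch below assumes the lower number in each pair belongs to the disk with host caching enabled; the helper name is hypothetical.

```python
def percent_slower(slower, faster):
    """How much lower `slower` is than `faster`, as a percentage of `faster`."""
    return (faster - slower) / faster * 100.0


# Averages over one hour, in thousands, taken from the write and read tests above.
write_gap = percent_slower(18.2, 20.6)  # write queue drain, cached vs. non-cached
read_gap = percent_slower(4.2, 4.8)     # sequential reads, cached vs. non-cached

if __name__ == "__main__":
    print("write: %.1f%% slower with host caching" % write_gap)
    print("read:  %.1f%% slower with host caching" % read_gap)
```

Both gaps come out a little above 10%, so "about 10%" is, if anything, a slightly conservative summary.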

Random Read Test, 20% working set:

As expected, as soon as the working set was 100% cached in RAM, there was no difference between the two VMs, because they were not reading from disk at all. The VM without host caching was about 10% faster to reach that point.

Mixed Read/Write Test, 20% working set:

The read side behaved the same as in the random read test, and the write queue drained about 10% faster on the disk without host cache.

We tried several other combinations of reading and writing, but we did not find a single instance where enabling host cache gave better results. The only scenario where we found host caching beneficial was restarting the Couchbase process - the VM with caching enabled finished server warmup faster.